There's something undeniably cool about vibe coding. I’ll be the first to admit it: I’ve dived straight in. Whether I'm speed-developing a proof of concept or (designers, please forgive me) spinning up a web app instead of poring over Balsamiq and Figma mockups, vibe coding is fast, fun, and a great way to get from 0 to POC in a fraction of the time.

I mean, what’s not to love? You throw a problem at an AI assistant, it kicks back some code, and you copy-paste, tweak, and roll. Need to write a CLI to parse logs, integrate a third-party API, or spin up a Flask app? No problem. One prompt and you're off to the races. In 2025, it's how many devs start projects: fast, fluid, and frictionless.

But as security teams are now discovering, "vibing" your way through development without grounding that code in security reality can open the door to real, systemic risk. At the center of that risk is a new and quietly growing threat: slopsquatting. We’ll explore slopsquatting in detail later in this post, but in short, it's an attacker exploiting an AI’s hallucinations to trick developers into installing malicious code.

With the recent boom in vibe coding, slopsquatting has risen as a threat vector in the cyber zeitgeist over the past four to six weeks. To paint a clearer picture: AI hallucinations + package managers + "vibe coding" = a new class of supply chain vulnerability that most organizations aren't prepared for yet.

Sounds bad, right? It is… but not all is lost. Let’s dig in.

Vibe Coding’s Hidden Risk: AI Hallucinations and Slopsquatting

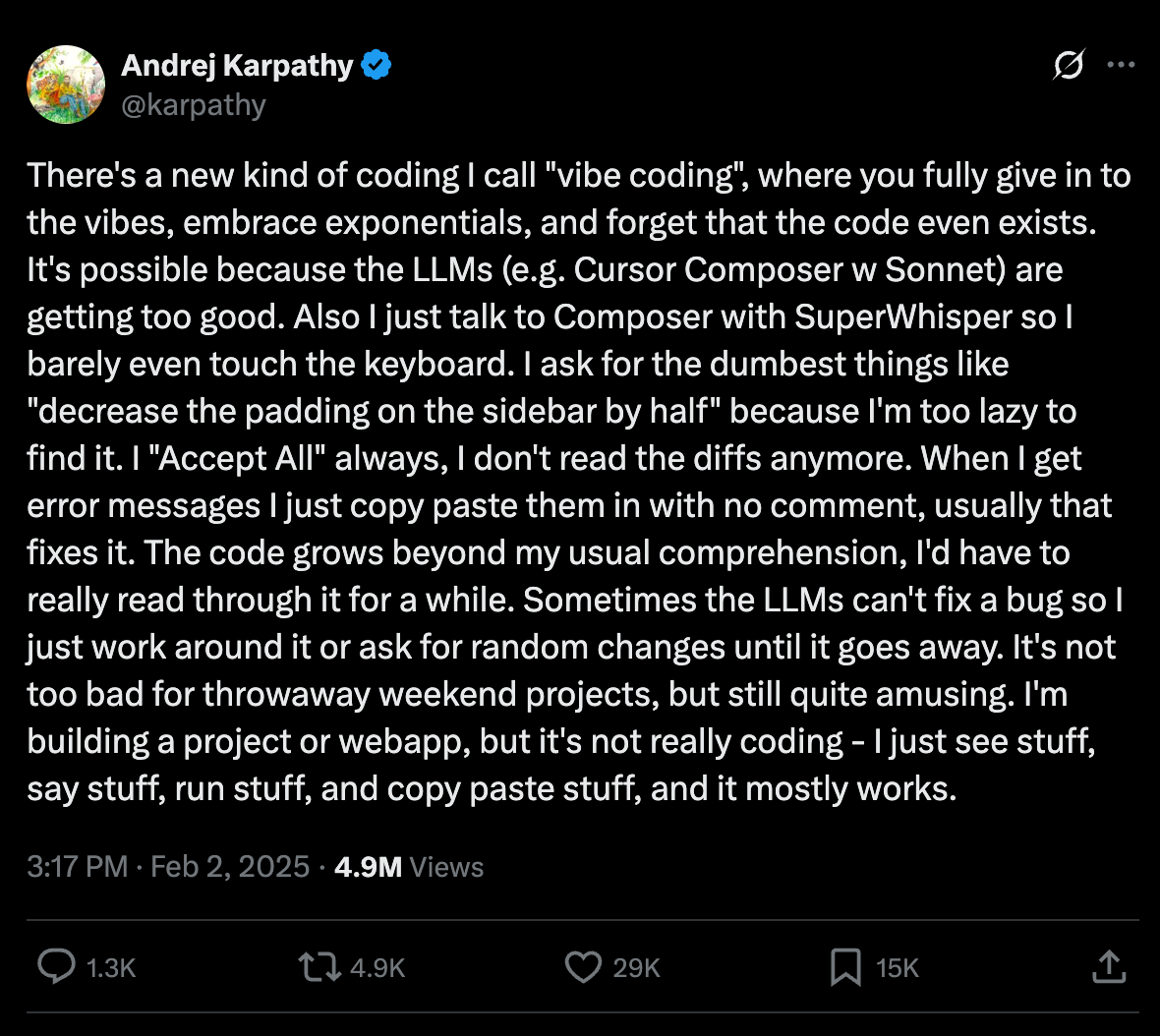

After Andrej Karpathy’s viral tweet, it’s almost impossible to turn anywhere and not see or hear about vibe coding.

…the tweet heard round the world…

Heck, “vibe” has been infiltrating other areas of the tech ecosystem, including here in security where (a few weeks ago) I introduced the concept of Vibe Hunting. It’s all about the vibes these days, isn’t it?

At the heart of vibe coding is a dependency on AI-generated code. But here's the catch: large language models don't always tell the truth. In fact, they "hallucinate" regularly, generating confident, plausible-sounding outputs that are entirely made up.

In a recent study of 16 popular AI coding models (both open and commercial), researchers found that:

Nearly 20% of all package recommendations from these models referenced packages that didn't actually exist.

Open-source models were the worst offenders—hallucinating fake packages in up to 33% of their outputs.

Even top-tier models like GPT-4 Turbo hallucinated packages in 3-5% of completions.

This brings us to slopsquatting.

What's Slopsquatting?

Slopsquatting is a new software supply chain attack that targets hallucinated package names. The term, coined by security researcher Seth Larson, is a play on "typosquatting," but the vector is different.

In typosquatting, an attacker preys on human error, registering a malicious package with a name like reqeusts instead of requests, hoping someone fat-fingers their install command.

In slopsquatting, the attacker takes advantage of AI error, registering a malicious package with a name the AI made up but developers assume is real.

If you're copy-pasting code from an AI assistant and you see import fastxml, it might look legitimate. You go to install it. If the name didn't exist before, it might now, because an attacker saw that fastxml was a common hallucination and preemptively published a malware package under that name. From there, it's game over.

You've just vibe-coded your way into a breach.

How Slopsquatting Works in the Wild

In recent controlled experiments, researchers ran half a million prompts through different AI models and collected over 200,000 hallucinated package names. Then they checked to see how often the same fake names reappeared.

The results were disturbing:

43% of hallucinated package names showed up every time a given prompt was re-run.

58% of fake names showed up in multiple runs.

Many hallucinations followed real naming conventions like fastmath, secureparser, autologger.

These aren't random strings. They're credible, AI-favored, and reproducible, which makes them gold for attackers. With a bit of scraping and monitoring, a threat actor can identify the most commonly hallucinated package names and register them with malicious code inside. From there, it's just a matter of time before an AI assistant hands that package name to an unsuspecting dev.
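Measuring this kind of repeatability doesn't take much. Here is a minimal sketch, not the researchers' actual methodology, that assumes you've re-run the same prompt several times, extracted the suggested package names from each run, and have a local snapshot of real registry names (`known_packages`); both function names are hypothetical. Defenders can use the same numbers to decide which hallucinated names are worth watching or blocking.

```python
from __future__ import annotations

from collections import Counter


def hallucination_counts(runs: list[set[str]], known_packages: set[str]) -> Counter:
    """Count how many runs each unregistered (hallucinated) package name appears in."""
    counts = Counter()
    for suggested in runs:
        # Each run is a set, so a name is counted at most once per run.
        counts.update(name for name in suggested if name not in known_packages)
    return counts


def recurrence_shares(counts: Counter, num_runs: int) -> tuple[float, float]:
    """Return (share of names seen in every run, share seen in more than one run)."""
    if not counts:
        return 0.0, 0.0
    total = len(counts)
    every_run = sum(1 for c in counts.values() if c == num_runs)
    repeated = sum(1 for c in counts.values() if c > 1)
    return every_run / total, repeated / total


# Three hypothetical runs of the same prompt.
runs = [
    {"requests", "fastmath", "secureparser"},
    {"requests", "fastmath"},
    {"fastmath", "autologger"},
]
counts = hallucination_counts(runs, known_packages={"requests"})
print(counts.most_common(1))  # [('fastmath', 3)]
print(recurrence_shares(counts, len(runs)))
```

A name like fastmath that appears in every run is exactly the kind of stable target an attacker would register first.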

It's not a theoretical risk. The attack is proof-of-concept ready, and closely related vectors are already being exploited.

Just last month, an AI-generated answer in a Google search snapshot suggested a typosquatted package (@async-mutex/mutex) to users. That package turned out to be malware. No hallucination was even required; the AI simply surfaced a bad package.

Now imagine if the package didn't exist yet, and the AI made it up. That's slopsquatting.

Why Slopsquatting Feeds on Vibe Coding

Vibe coding magnifies the risks of slopsquatting in three big ways:

1. Blind Trust in the Output

When you vibe code, you often treat AI suggestions as a trusted starting point. You assume the package exists. You assume the function signature works. You assume the AI knows what it's doing.

Most of the time, that's true enough to be productive. But assumptions are where attackers live.

2. Speed Over Scrutiny

AI accelerates development, which is a good thing until it skips necessary steps like dependency validation. Developers may not stop to verify that a package the AI suggests is real, safe, or maintained.

Install now, troubleshoot later.

Except sometimes later means incident response.

3. Automation Drift

In some orgs, AI tools are now wired directly into the pipeline: auto-generating code, updating packages, even managing dependencies. That creates a dangerously short feedback loop between AI suggestion and code deployment.

If a hallucinated package name slips through, it may go live before anyone realizes the dependency was never legitimate.

Security Implications for Organizations

From a security leader's perspective, slopsquatting introduces a new category of risk: one that combines AI unpredictability with the known perils of supply chain attacks. Let's break it down.

New Threat Surface

Hallucinated package names are essentially a new attack surface—one that only exists because AIs are confidently making up components that don't exist yet. That means developers can be tricked into installing malware not because they made a typo or downloaded something shady, but because they followed AI instructions.

Low-Cost, High-Yield for Adversaries

It's cheap to register a package on PyPI or npm. It's cheaper still to use AI monitoring tools or prompt fuzzing to discover common hallucinations. That makes slopsquatting a low-cost, potentially high-yield attack vector—especially if a single hallucinated package name spreads across multiple orgs.

Real-Time, One-to-Many Exploits

This is where it gets dangerous. AI suggestions are repeatable at scale. If one hallucinated package name shows up often enough, and a malicious version is published, hundreds of developers could copy-paste their way into compromise within hours.

In that sense, slopsquatting is the AI-era version of phishing: scale-driven, trust-based, and opportunistic.

Start Where You Are: Quick Wins for Resource-Constrained Teams

Not every security team has the budget for enterprise tools or dedicated headcount. If that's your situation, don't worry. You can still build effective defenses against slopsquatting with these practical, low-cost measures.

First, establish basic package verification workflows. Before any package gets installed, whether suggested by a developer or an AI, it should pass a few simple checks. Is the package more than 24 hours old? Does it have a GitHub repository? Any documentation at all? A "no" to any of these is a red flag, and these checks alone can catch many slopsquatting attempts before they infiltrate your codebase.
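These checks are easy to automate for Python packages. The sketch below assumes PyPI's public JSON API (`https://pypi.org/pypi/<name>/json`, which returns HTTP 404 for unregistered names); the helper names and the exact red-flag rules are illustrative, mirroring the three questions above. Note that it looks up the PyPI project name, which doesn't always match the name you import.

```python
from __future__ import annotations

import json
import urllib.error
import urllib.request
from datetime import datetime, timedelta, timezone

# PyPI's public JSON API; unregistered names return HTTP 404.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def fetch_pypi_metadata(name: str) -> dict | None:
    """Fetch project metadata from PyPI, or None if the name isn't registered."""
    try:
        with urllib.request.urlopen(PYPI_JSON_URL.format(name=name), timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def first_upload(meta: dict) -> datetime | None:
    """Earliest upload time across every file of every release."""
    times = [
        # Older Pythons' fromisoformat() rejects a trailing "Z", so normalize it.
        datetime.fromisoformat(f["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in meta.get("releases", {}).values()
        for f in files
    ]
    return min(times) if times else None


def red_flags(meta: dict | None, now: datetime,
              min_age: timedelta = timedelta(hours=24)) -> list[str]:
    """Apply the three basic checks: age, linked GitHub repo, any documentation."""
    if meta is None:
        return ["not on PyPI at all (possible hallucination)"]
    flags = []
    created = first_upload(meta)
    if created is None or now - created < min_age:
        flags.append("first published too recently (or no files uploaded)")
    info = meta.get("info") or {}
    links = [str(u) for u in (info.get("project_urls") or {}).values()]
    links.append(info.get("home_page") or "")
    if not any("github.com" in link.lower() for link in links):
        flags.append("no GitHub repository linked")
    if not (info.get("summary") or "").strip() and not (info.get("description") or "").strip():
        flags.append("no description or documentation")
    return flags
```

With network access, `red_flags(fetch_pypi_metadata("fastxml"), datetime.now(timezone.utc))` would run all three checks against a name an AI just suggested; an empty list means "no obvious red flags," not "safe."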

Next, embrace dependency locking as your first line of defense. Commit lockfiles to version control: package-lock.json for npm, or for Python a requirements.txt with every version pinned (ideally with hashes, which pip's --require-hashes mode enforces). That way builds only use packages you've already vetted. It's a simple practice, but it prevents the accidental installation of a malicious package that was published five minutes ago. Consider blocking CI runs if lockfiles are missing or haven't been updated in the last 30 days.
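A requirements.txt only behaves like a lockfile when every entry is pinned to an exact version. As a rough CI gate (a hypothetical helper, not a full requirements-file parser; it skips option lines such as `-r` and ignores anything after the pinned version), you could fail the build on any unpinned line, optionally also requiring `--hash=` entries as pip's `--require-hashes` mode does.

```python
import re

# "name[extras] == version" at the start of a line; markers or hashes may follow.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*\s*(\[[^\]]*\])?\s*==\s*[^\s;]+")


def unpinned_requirements(text: str, require_hashes: bool = False) -> list[str]:
    """Return requirement lines that aren't pinned with == (and, optionally, hashed)."""
    problems = []
    # pip treats a trailing backslash as a line continuation, so join those first.
    for raw in text.replace("\\\n", " ").splitlines():
        line = raw.split(" #", 1)[0].strip()  # drop inline comments
        if not line or line.startswith(("#", "-")):
            continue  # blank line, full-line comment, or an option such as -r / --index-url
        if not PINNED.match(line):
            problems.append(line)
        elif require_hashes and "--hash=" not in line:
            problems.append(line)
    return problems
```

In CI, a non-empty result can simply be printed and turned into a non-zero exit status.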

Perhaps most importantly, approach your AI tools with a zero-trust mentality. Treat AI suggestions like code from an untrusted contributor: helpful, but requiring verification. Configure your development environment to highlight uncertain completions and to flag any package names that don't exist in the registry before allowing installation.

Finally, don't underestimate the power of education. A single lunch-and-learn session on slopsquatting, typosquatting, and dependency confusion can turn your developers from unwitting victims into active defenders. Share real-world examples of supply chain attacks; nothing drives the point home like seeing how a single malicious package brought down a major company.

Building Maturity: The Comprehensive Approach

As your resources grow, you can build toward a more sophisticated defense strategy. This isn't about replacing your quick wins; it's about enhancing them with additional layers of protection.

Advanced package verification goes beyond basic checks. Create comprehensive workflows that examine not just whether a package exists, but whether it's actively maintained, well-documented, and trusted by the community. Implement automated systems that analyze package metadata, looking for red flags like sudden maintainer changes, suspicious version bumps, or unusual installation scripts.
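As an illustration of metadata analysis, the sketch below compares two releases of an npm package, assuming dicts shaped like npm registry version metadata (`version`, `maintainers`, `scripts`). The rules are simple, hypothetical heuristics: install-time lifecycle scripts (`preinstall`, `install`, `postinstall`, which npm runs automatically on install), maintainers who weren't on the previous release, and a major-version jump of more than one.

```python
from __future__ import annotations

# Lifecycle scripts npm runs automatically when a package is installed.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def major_version(version: str) -> int:
    """Major component of a semver string such as '1.4.2' or '2.0.0-beta.1'."""
    return int(version.lstrip("v").split(".")[0])


def release_red_flags(previous: dict, current: dict) -> list[str]:
    """Compare two releases' metadata and return human-readable warnings."""
    flags = []
    hooks = [h for h in INSTALL_HOOKS if h in (current.get("scripts") or {})]
    if hooks:
        flags.append("runs install-time scripts: " + ", ".join(hooks))
    old = {m["name"] for m in previous.get("maintainers", [])}
    new = {m["name"] for m in current.get("maintainers", [])}
    if new - old:
        flags.append("new maintainer(s): " + ", ".join(sorted(new - old)))
    jump = major_version(current["version"]) - major_version(previous["version"])
    if jump > 1:
        flags.append(f"major version jumped by {jump}")
    return flags
```

None of these signals is malicious on its own; they're prompts for a human to look closer.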

The next evolution is building your own package infrastructure. Setting up an internal mirror or proxy (tools like Sonatype Nexus OSS are free and robust) gives you complete control over what packages enter your environment. Route all installations through this vetted mirror, and suddenly that AI hallucination can't install anything malicious. If it's not in your mirror, it doesn't get installed. This forces a manual review process for any new dependencies, which might seem like friction but is actually protection.

Integrated security scanning transforms your defenses from reactive to proactive. Deploy tools like Socket, Snyk, or the free OWASP OSV-Scanner to continuously analyze your dependency tree. These tools can detect known vulnerabilities, analyze installation scripts for malicious behavior, and even identify packages that are suspiciously similar to popular libraries. Integrate these scans into your pre-commit hooks and CI/CD pipeline to catch issues before they ever reach production.
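Commercial scanners combine many signals, but the "suspiciously similar name" check on its own is straightforward. Here is a minimal sketch using Python's standard `difflib`, assuming you supply a list of popular package names (for example, your ecosystem's most-downloaded packages); the 0.8 similarity cutoff is an arbitrary starting point, not a tuned value.

```python
import difflib


def lookalikes(candidate: str, popular: list[str], cutoff: float = 0.8) -> list[str]:
    """Popular package names that `candidate` closely resembles (but doesn't equal)."""
    name = candidate.lower()
    names = [p.lower() for p in popular]
    if name in names:
        return []  # exact match: this *is* the popular package
    # get_close_matches ranks candidates by SequenceMatcher.ratio(), best first.
    return difflib.get_close_matches(name, names, n=3, cutoff=cutoff)


print(lookalikes("reqeusts", ["requests", "numpy", "flask"]))  # ['requests']
```

Plain string similarity has blind spots: @async-mutex/mutex scores only about 0.76 against async-mutex, below this cutoff, which is why real tools layer on ecosystem-specific rules such as comparing scoped names against unscoped ones.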

Don't forget about your CI/CD pipeline itself. Enhanced controls here can prevent many attacks. Implement strict dependency pinning across all ecosystems, automate lockfile validation, and create policies for how old dependencies can be before requiring updates. Establish approval workflows for adding new packages. Yes, it adds a step to the process, but it also adds a crucial human checkpoint that AI can't bypass.
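Lockfile validation can also back up an internal mirror. This sketch assumes npm's lockfileVersion 2 or 3 format, where each installed package appears under `packages` with `resolved` and `integrity` fields; the mirror URL is a placeholder, and the policy (every package must resolve through the mirror and carry an integrity hash) is one possible choice rather than a standard.

```python
import json

# Placeholder URL for an internal npm proxy (e.g. a Nexus repository); an assumption.
APPROVED_REGISTRY = "https://nexus.example.internal/repository/npm-proxy/"


def lockfile_problems(lock_text: str, approved_registry: str = APPROVED_REGISTRY) -> list[str]:
    """Check a package-lock.json (lockfileVersion 2 or 3) against a simple policy."""
    lock = json.loads(lock_text)
    if lock.get("lockfileVersion", 1) < 2:
        return ["lockfileVersion < 2: regenerate with npm 7 or later"]
    problems = []
    for path, entry in lock.get("packages", {}).items():
        if path == "" or entry.get("link"):
            continue  # the project root itself, or a symlinked local/workspace package
        resolved = entry.get("resolved", "")
        if not resolved.startswith(approved_registry):
            problems.append(f"{path}: not resolved via approved registry ({resolved or 'no URL'})")
        if not entry.get("integrity"):
            problems.append(f"{path}: missing integrity hash")
    return problems
```

Run as a required CI step, a check like this turns "route everything through the mirror" from a convention into an enforced rule.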

Finally, build security into your culture through continuous education. Develop comprehensive training programs that cover not just the technical aspects of supply chain attacks, but also the evolving tactics of attackers. Create security champions within each development team who can spot potential issues early. Regular awareness updates keep everyone informed about new threats, and integrating security considerations into your AI adoption policies ensures that innovation doesn't come at the expense of safety.

Implementation Roadmap

Phase 1 (Weeks 1-2): Quick Wins

Implement basic package verification

Set up dependency locking

Configure AI zero-trust settings

Conduct initial developer training

Phase 2 (Months 1-3): Foundation Building

Deploy internal package mirror

Integrate basic security scanning

Enhance CI/CD controls

Expand training program

Phase 3 (Months 3-6): Maturity

Full security tool integration

Advanced workflow automation

Comprehensive policy framework

Continuous improvement processes

Where Do We Go From Here?

Slopsquatting is just one example of how AI hallucinations, combined with developer trust and automation, can short-circuit traditional security assumptions. As we navigate this new development paradigm, the solution isn't to abandon AI; it's to embrace it responsibly.

If your team is using AI to write code, start with these fundamentals:

Build AI usage policies that include dependency hygiene

Incorporate hallucination detection or package validation in your CI/CD process

Educate developers about slopsquatting and similar risks

Treat vibe-coded output as something that needs security review, just like any third-party dependency

Back to what I said at the beginning of this week's blog: not all is lost.

The first step towards confronting an issue is awareness, and we are aware of the risks that vibe coding brings. We're in the early days of a new development paradigm. But just like we learned with open source, the sooner we align productivity with secure practices, the better off we'll be.

Stay curious and stay secure, my friends.

Damien